Development Timeline

PG-Pad-Aug d50-200e

Model 1: This model uses a Progressive Growing (PG) architecture with padding and data augmentation, trained for 200 epochs.

PGv4-RefPad d50-300e

Model 2: The 4th version of the PG model, using reflection padding. It was trained for 300 epochs.

PGv5-RefPad d50-300e

Model 3: The 5th version of the PG model, also using reflection padding and trained for 300 epochs.

PG-Aug d50-200e

Model 4: This iteration returns to the original PG architecture with augmentation, trained for 200 epochs.

Std d25-150e

Model 5: A standard (non-progressive) architecture with a dataset split of 25%, trained for 150 epochs.

Std-VLR-L d48-250e

Model 6: This standard model uses a very low learning rate (VLR) and a larger dataset split (48%), trained for 250 epochs.
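The run labels above appear to follow a `<variant> d<split>-<epochs>e` pattern. The sketch below parses a label under that reading. The `RunLabel` type and `parse_run_label` function are hypothetical names introduced for illustration, and treating the `d` number as a dataset split percentage is inferred from the descriptions of Models 5 and 6 rather than stated for every entry.

```python
import re
from typing import NamedTuple


class RunLabel(NamedTuple):
    """Hypothetical container for the parts of a run label."""
    variant: str       # e.g. "PGv4-RefPad"
    dataset_pct: int   # number after "d" (assumed to be a split percentage)
    epochs: int        # number before the trailing "e"


# Assumed pattern: "<variant> d<split>-<epochs>e"
_LABEL = re.compile(r"^(?P<variant>\S+)\s+d(?P<pct>\d+)-(?P<epochs>\d+)e$")


def parse_run_label(label: str) -> RunLabel:
    """Split a timeline label into variant, dataset split, and epoch count."""
    match = _LABEL.match(label.strip())
    if match is None:
        raise ValueError(f"unrecognized run label: {label!r}")
    return RunLabel(match["variant"], int(match["pct"]), int(match["epochs"]))


# Example with a label taken from the timeline.
run = parse_run_label("Std-VLR-L d48-250e")
```

Parsing the labels this way makes it easy to sort or group runs by epoch count or split size when comparing metrics.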

Project Overview

This case study chronicles a multi-month project to build and refine a Generative Adversarial Network (GAN) for creating high-resolution j-card “jacket” designs. The project grew out of frustration with the cost and labor of manually reformatting game art, especially given the high prices these pieces can fetch.

The project addresses a specific, monotonous, and labor-intensive problem: the translation of three-part Nintendo 3DS game case covers into j-card layouts. When done manually, this process can take 20-45 minutes per cover. As a case in point, creating just 37 covers for the mainline Pokémon games took roughly two weeks of on-and-off work. The goal was to build a deep learning pipeline that could automate this task intelligently.

The core solution is an ETL pipeline powered by Pix2Pix, a conditional GAN framework known for image-to-image translation. The process involved scraping 157 high-quality 3DS cover scans using game serial IDs from databases like 3dsdb and GameTDB. These source images were paired with 50 manually created "target" images, which served as the ground truth for training. The project was intentionally scoped to 3DS covers to ensure a manageable and high-quality dataset could be sourced.

Key challenges included developing a semi-automated GUI tool using OpenCV for consistent image segmentation and navigating the iterative process of model training and refinement. The project ultimately trained eight specialized sub-models in a human-in-the-loop cycle, underscoring a core lesson: robust data preparation is often more critical than complex architectural changes. Currently, the top-performing model achieves about 80% of the desired output, with a clear path to improvement by expanding the training set.

Methodology Highlights

- Pix2Pix Architecture: Utilized a U-Net based Generator and a PatchGAN Discriminator for the image translation task.

- Structured Data Acquisition: Sourced an initial dataset of 157 covers from GameTDB and 3dsdb.

- Semi-Automated Segmentation: Built an interactive tool with OpenCV for edge detection, segmenting images into 6 sections.

- Specialized Sub-Models: Trained 8 distinct sub-models to handle different components of the j-card design.

- Accelerated Training: Leveraged Google Colab Pro with A100 GPUs and enabled mixed-precision training for significant speed gains.

- Human-in-the-Loop Feedback: Used an iterative HITL cycle to refine the models between training runs.
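For reference, the standard Pix2Pix objective that pairs a generator $G$ with a conditional discriminator $D$ is written out below. Here $x$ is the source cover, $y$ the hand-made target, and $z$ the noise input. Whether this project used the objective unchanged, and what weight $\lambda$ it used, are not stated in this write-up, so treat this as background rather than as the exact training loss.

$$
\mathcal{L}_{cGAN}(G, D) = \mathbb{E}_{x,y}\big[\log D(x, y)\big] + \mathbb{E}_{x,z}\big[\log\big(1 - D(x, G(x, z))\big)\big]
$$

$$
\mathcal{L}_{L1}(G) = \mathbb{E}_{x,y,z}\big[\lVert y - G(x, z) \rVert_1\big]
$$

$$
G^{*} = \arg\min_{G} \max_{D} \; \mathcal{L}_{cGAN}(G, D) + \lambda \, \mathcal{L}_{L1}(G)
$$

The adversarial term pushes outputs toward realistic detail, while the L1 term keeps them close to the paired target. The original Pix2Pix paper uses $\lambda = 100$.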

ETL Process

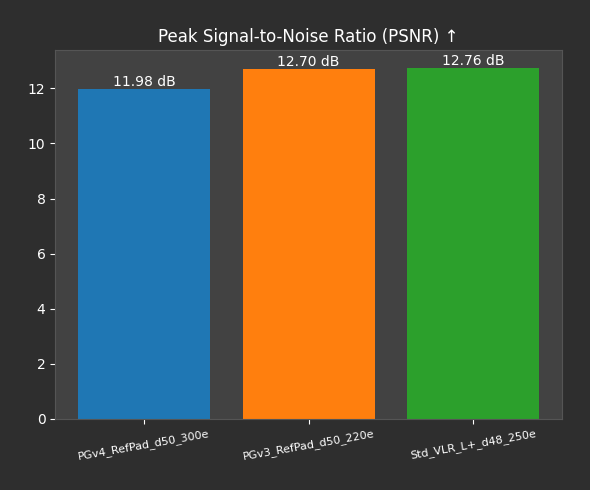

Quantitative Results

PSNR Comparison

Peak Signal-to-Noise Ratio (PSNR) measures reconstruction quality as the ratio of the maximum possible signal power to the power of the corrupting noise, expressed in decibels. Higher PSNR values generally indicate higher quality reconstructions.
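As a minimal sketch of how PSNR is computed, the function below applies the usual definition, 10 · log10(MAX² / MSE), to two flattened lists of pixel values. It is written in plain Python for portability and is not the evaluation code used in this project. The function name `psnr` and the 8-bit default `max_value=255.0` are assumptions made for illustration.

```python
import math


def psnr(reference, candidate, max_value=255.0):
    """Return PSNR in dB between two equally sized sequences of pixel values."""
    if len(reference) != len(candidate):
        raise ValueError("images must contain the same number of pixels")
    if not reference:
        raise ValueError("images must not be empty")

    # Mean squared error between corresponding pixels.
    mse = sum((r - c) ** 2 for r, c in zip(reference, candidate)) / len(reference)
    if mse == 0:
        return math.inf  # identical images: no noise at all

    return 10.0 * math.log10(max_value ** 2 / mse)


# Toy example: every pixel is off by 10 intensity levels (MSE = 100).
target = [100, 100, 100, 100]
output = [110, 90, 110, 90]
score = psnr(target, output)  # roughly 28.13 dB
```

In practice the same calculation is usually run on whole image arrays with NumPy or an image-quality library, and the result depends on the assumed pixel range.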

SSIM Comparison

The Structural Similarity Index Measure (SSIM) assesses the perceptual similarity between two images by comparing their luminance, contrast, and structure. It aligns better with human visual perception than PSNR. A value closer to 1 indicates greater similarity.
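The sketch below illustrates the SSIM formula on two flattened lists of pixel values. It is a deliberate simplification: it computes one global score over the whole image, whereas standard SSIM averages the same expression over small sliding windows. The function name `global_ssim` is hypothetical, the constants use the conventional K1 = 0.01 and K2 = 0.03, and this is not the evaluation code used in this project.

```python
def global_ssim(x, y, max_value=255.0):
    """Single-window SSIM over two equally sized sequences of pixel values."""
    if len(x) != len(y):
        raise ValueError("images must contain the same number of pixels")
    if not x:
        raise ValueError("images must not be empty")

    n = len(x)
    mu_x = sum(x) / n
    mu_y = sum(y) / n

    # Population variances and covariance of the two signals.
    var_x = sum((a - mu_x) ** 2 for a in x) / n
    var_y = sum((b - mu_y) ** 2 for b in y) / n
    cov_xy = sum((a - mu_x) * (b - mu_y) for a, b in zip(x, y)) / n

    # Stabilizing constants with the conventional K1 = 0.01, K2 = 0.03.
    c1 = (0.01 * max_value) ** 2
    c2 = (0.03 * max_value) ** 2

    numerator = (2 * mu_x * mu_y + c1) * (2 * cov_xy + c2)
    denominator = (mu_x ** 2 + mu_y ** 2 + c1) * (var_x + var_y + c2)
    return numerator / denominator


# Toy example: an image compared with itself, then with its inverse.
same = global_ssim([10, 200, 30, 90], [10, 200, 30, 90])
inverted = global_ssim([0, 255], [255, 0])
```

Identical inputs score 1, and structurally inverted inputs score below 0. This is why SSIM can separate outputs that PSNR rates the same.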